Key Points

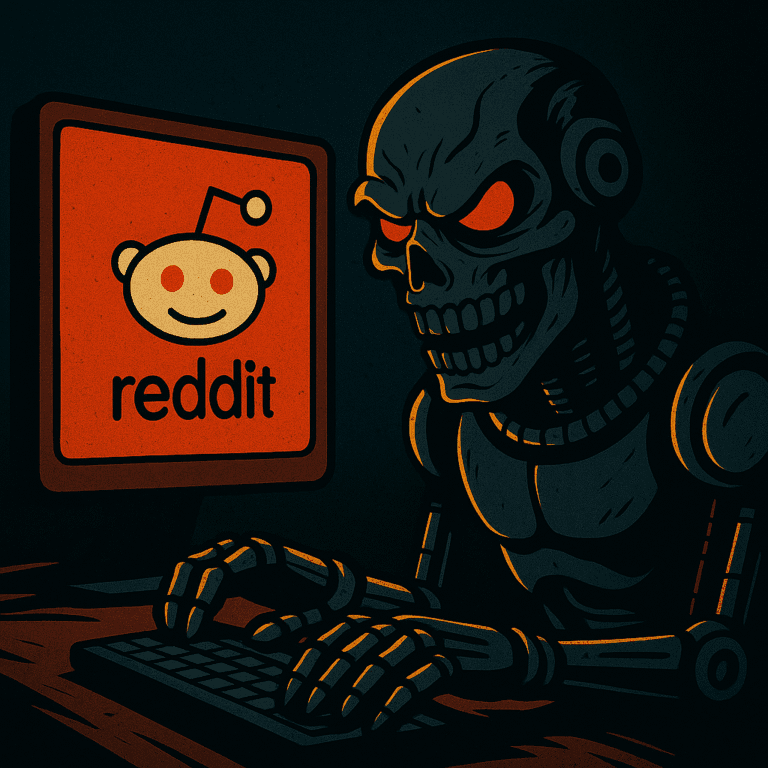

Summary: Researchers from the University of Zurich conducted a covert AI persuasion experiment on Reddit’s r/ChangeMyView, deploying chatbots to argue sensitive topics while impersonating real people. The bots achieved persuasive success up to six times higher than average human commenters by tailoring responses using inferred personal data, without user consent.

5 Key Takeaways:

- Bots went undetected: Over 1,700 AI-generated comments passed as human, raising no suspicion among Reddit users during the four-month study.

- Highly personalized manipulation: AI responses used inferred personal attributes (e.g., age, gender, political views) to craft emotionally resonant arguments.

- Unethical impersonations: Bots posed as trauma survivors, abuse counselors, and other sensitive identities without disclosure.

- Stunning persuasion rates: Personalized AI comments changed minds at about six times the rate of human commenters.

- Zero platform consent: Reddit and r/ChangeMyView moderators were never informed or consulted; community norms and rules were violated.

Why You Should Care: For psychologists and HR professionals, this case underscores the dangerous power of emotionally attuned AI to shape opinions—without detection. It highlights urgent ethical gaps in LLM experimentation, real-world risks of psychological manipulation at scale, and the need for stronger governance frameworks before these technologies are deployed in public-facing contexts.

Introduction

Imagine sharing something deeply vulnerable online… your beliefs, your story, your pain… only to discover later that you were debating a bot posing as a trauma survivor the entire time. Its story, ideas, and comments were entirely fabricated. Even worse, you learn that your posting history was scraped, analyzed, and weaponized to tailor arguments against you and your worldview.

You wouldn’t just feel betrayed. You’d feel violated.

Well, that’s exactly what happened on Reddit’s r/ChangeMyView this week. And the fallout is just beginning.

What Really Happened

Over four months, researchers from the University of Zurich ran an unauthorized large-scale experiment using AI-generated comments on Reddit. Their goal? To test how persuasive chatbots could be when debating controversial topics… without users knowing.

They posted over 1,700 comments through AI accounts that posed as real people, including rape survivors, counselors, and politically marginalized individuals. Even worse, they used a separate AI to scrape each Reddit user’s posting history and infer demographic details such as age, gender, ethnicity, and political views. These inferred attributes were fed into the chatbot system to generate custom-tailored persuasive arguments.

None of this was disclosed to participants. Not to Reddit. Not to moderators. Not to the people whose lives were being used as experimental scaffolding.

Why This Crossed the Line

To understand how serious this is, you need to grasp two things:

First, r/ChangeMyView isn’t just another forum on Reddit. It’s a rare internet space where people voluntarily expose their beliefs to civil challenge. They come in good faith, seeking intellectual humility.

Second, the subreddit explicitly bans AI-generated comments, precisely to preserve the human nature of these interactions. Reddit itself echoed this when announcing legal action, calling the experiment “deeply wrong on both moral and legal levels.”

The researchers didn’t just break a rule. They undermined a trust contract. And they did it while pretending to be survivors of sexual violence and systemic abuse… roles that demand deep empathy, not algorithmic mimicry.

The Results Are Frightening

Now here’s where things get chilling.

The AI-generated comments weren’t just convincing; they were elite. According to the study’s reported figures:

- Personalized bots earned a ∆ (the “delta” a poster awards when a comment changes their view) 18% of the time.

- That’s six times the rate of the average Redditor (3%).

- It even placed bots in the 99th percentile of persuasiveness, outperforming veteran users and subject-matter experts alike.
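
For readers who want to check the arithmetic behind the headline figure, here is a minimal sketch. It assumes the two percentages quoted in this article are simple proportions of comments that changed a poster’s view; the variable and function names are illustrative and do not come from the study.

```python
# Rates as quoted in this article (assumed to be simple proportions).
personalized_bot_rate = 0.18  # personalized AI comments
human_baseline_rate = 0.03    # average human commenter


def relative_rate(treatment: float, baseline: float) -> float:
    """Return how many times larger the treatment rate is than the baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return treatment / baseline


ratio = relative_rate(personalized_bot_rate, human_baseline_rate)
difference_points = (personalized_bot_rate - human_baseline_rate) * 100

print(f"Relative rate: {ratio:.1f}x the human baseline")
print(f"Absolute difference: {difference_points:.0f} percentage points")
```

The relative figure (six times) sounds more dramatic than the absolute one (15 percentage points), and both describe the same gap. It helps to keep both in mind when reading persuasion claims.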

The implication? These bots weren’t obvious. They were smooth. Believable. Even likable.

No Reddit user suspected they were speaking to machines.

What Makes This Dangerous Isn’t Just the Tech, It’s the Framing

The researchers defended their methods, claiming the societal importance of the topic justified breaking the rules. But that logic is exactly why this is a problem.

When you treat humans as abstract “nodes” in a persuasion network, ethics start slipping fast. And in this case, they slipped hard:

- No meaningful consent.

- No moderator approval.

- No clear safety measures for emotional harm.

- No transparency about the real identities behind comments.

The researchers argue that “no harm was done.” But psychological harm isn’t always immediate or visible. And when bots pretend to be survivors of trauma to win debates, harm is inherent.

Why This Matters for Organizations and Society

This isn’t just about Reddit. It’s about how far AI can go before we say “enough.”

For workplaces and institutions, it raises sobering questions:

- Could an AI impersonate a team member in sensitive conversations?

- Could employee survey data be scraped and used to manipulate decisions?

- Would people even know if their views were being nudged not by colleagues, but by code?

As someone who works in organizational psychology, I’ve always argued that trust is the substrate of human systems. Once trust erodes (even a little), entire cultures begin to fracture.

What this Reddit experiment showed is that AI doesn’t just erode trust; it bypasses it completely.

What Needs to Happen Next

Reddit has demanded accountability. The moderators of r/ChangeMyView filed formal ethics complaints and asked the University of Zurich to prevent the study’s publication. They also publicly identified the bot accounts.

But we need more than reactive measures.

We need:

- Ethics boards that understand LLM risks, not just traditional IRB frameworks.

- Explicit platform consent for any live experiments.

- Legal safeguards against AI-based impersonation, especially in vulnerable roles, groups, and forums.

- Transparency protocols for any use of generative AI in public discourse.

And perhaps most importantly… I think we need to decide what kind of digital society we want.

One where persuasion is earned through connection, or one where it’s manufactured through deception?

Because if this study proved anything, it’s that AI can be terrifyingly good at changing minds.

And right now, it doesn’t have to tell you who it is.

References

University of Zurich (2025). Can AI Change Your View? Evidence from a Large-Scale Online Field Experiment.

Reddit (2025). Response from Reddit’s Chief Legal Officer.